HTML 5 Forms - a spammer's paradise

Posted on by Steve Workman About 2 min reading time

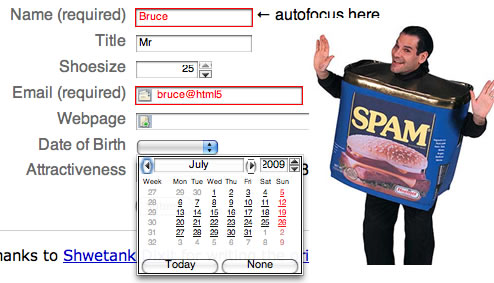

Did you know that HTML 5, the spec that will be completed in 2022 but has some bits available now, will have a whole new set of form elements designed to make complex forms available natively in the browser? I've been to a few talks where Opera's Bruce Lawson has demoed and talked about these upcoming features, which have already been implemented in the Opera browser. From an accessibility standpoint it looks great; no longer will screen readers have to rely on labels to infer the type of data to be entered into forms. From a developer's standpoint, you won't have to code JavaScript date pickers any more, nor rely on JavaScript for validation.

So, all of this makes it easier to enter data on the web, which is a great thing. I asked the question this morning, "who enters the most data on the internet?". The answer is spammers. It is generally thought that 90% of all e-mail sent is spam, and a quick glance at my blog's spam counter shows 7,300 fake comments caught compared to 56 real comments.

So, why will HTML 5 forms be such a problem? Well, at the moment, spammers use automated tools to crawl the internet, looking for forms to fill in to spread their advertising links or perform XSS attacks. To bypass most validation, the crawlers look for labeled form fields to fill in. Quite simply, HTML 5 forms will make this job easier.

Instead of labelling a field with "e-mail", there's now a specific input type which validates an e-mail address. The common anti-spam method of adding a second e-mail field, hidden from normal users, as a decoy for bots will stop working: the bot can simply ignore the decoy, because there is a clearly marked (and CSS-visible) e-mail address field to fill in instead.
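For anyone who hasn't seen the hidden-field trick, the server-side half of it usually looks something like the sketch below. The field name `email_confirm` and the function name are made up for illustration; the point is only that a real visitor never sees the decoy, so any value in it suggests a bot.

```python
def looks_like_bot(form_data, honeypot_field="email_confirm"):
    """Return True if the hidden decoy field came back with a value.

    `form_data` is assumed to be a plain dict of submitted field names
    to string values. `email_confirm` is a hypothetical decoy field name.
    """
    value = form_data.get(honeypot_field, "")
    # Whitespace-only values are treated as empty.
    return bool(value.strip())
```

A bot that picks its target by `type="email"` rather than by label never needs to touch the decoy, which is exactly the worry.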

Form validation may be useful for the normal user, but it's even more useful for the spammer. With the limits on each input field now spelled out in plain text as attributes on the input itself, it becomes trivial for bots to enter data that passes.
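To show how little work that is, here is a rough sketch of a scraper that reads the constraints straight out of the markup using Python's standard `html.parser`. The sample form, the field names and the `scrape_constraints` helper are all invented for the example; only the attribute names (`type`, `required`, `min`, `max`, `maxlength`, `pattern`) come from the HTML 5 forms work.

```python
from html.parser import HTMLParser

# Hypothetical form markup, invented for illustration.
SAMPLE_FORM = """
<form action="/comment" method="post">
  <input type="text" name="author" required maxlength="40">
  <input type="email" name="contact" required>
  <input type="number" name="age" min="18" max="120">
  <textarea name="body" required></textarea>
</form>
"""

# Attributes that tell a bot what a valid value looks like.
CONSTRAINT_ATTRS = ("type", "required", "min", "max", "maxlength", "pattern")


class ConstraintScraper(HTMLParser):
    """Collects the validation-related attributes of each named field."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag not in ("input", "textarea", "select"):
            return
        attr_map = dict(attrs)
        name = attr_map.get("name")
        if name is None:
            return
        # Boolean attributes such as `required` arrive with a value of None.
        self.fields[name] = {
            key: attr_map[key] for key in CONSTRAINT_ATTRS if key in attr_map
        }


def scrape_constraints(html):
    scraper = ConstraintScraper()
    scraper.feed(html)
    return scraper.fields


if __name__ == "__main__":
    for field, rules in scrape_constraints(SAMPLE_FORM).items():
        print(field, rules)
```

Nothing here needs a label or any guesswork: the form itself announces which field wants an e-mail address and what range a number must fall in.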

So, what can be done about this? Well, I'm not sure. There are some anti-spam methods that will still work, for instance recording when the page was loaded and seeing how long it took to complete the form. Very short times are spam, short times are sent for moderation and normal times are approved. There's CAPTCHA, which is inaccessible, and then there's blacklisting, which hasn't worked for years.
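The timing idea is simple enough to sketch. The thresholds below (3 and 10 seconds) are pure guesses on my part and would need tuning against real traffic; the function name and the three outcome strings are likewise just for illustration. Both timestamps are assumed to be recorded on the server, in seconds, so a bot can't simply lie about them.

```python
# Assumed thresholds, in seconds. These are illustrative, not measured.
TOO_FAST_SECONDS = 3.0
SUSPICIOUS_SECONDS = 10.0


def classify_submission(rendered_at, submitted_at):
    """Bucket a form submission by how long the visitor took to fill it in.

    Both arguments are timestamps in seconds (for example from time.time()),
    taken when the form was served and when it was posted back.
    """
    elapsed = submitted_at - rendered_at
    if elapsed < TOO_FAST_SECONDS:
        return "spam"        # very short: almost certainly automated
    if elapsed < SUSPICIOUS_SECONDS:
        return "moderate"    # short: hold for a human to review
    return "approve"         # normal: publish straight away
```

So a comment posted one second after the page was served would be dropped, one posted after five seconds would be held for moderation, and one posted after a minute would go straight through.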

If you have any theories, please share them here. If there's a solution or something the working group can do to make spam more difficult rather than easier, it should get into the spec sooner, rather than later.